The British Standards Institution (BSI) has published the first international standard for the safe management of artificial intelligence, according to a report by the German Press Agency (dpa).

The British news agency PA Media reported on Tuesday that the new standard, issued in the United Kingdom, sets out how to establish, implement, maintain, and continually improve a management system for artificial intelligence, with an emphasis on safeguards.

The BSI, the UK's national standards body, has released guidance on how companies should responsibly develop and deploy artificial intelligence tools, both internally and externally.

The standard arrives amid ongoing debate over how to regulate a fast-moving technology that has become increasingly prominent over the past year, driven by the widespread release of generative artificial intelligence tools such as ChatGPT.

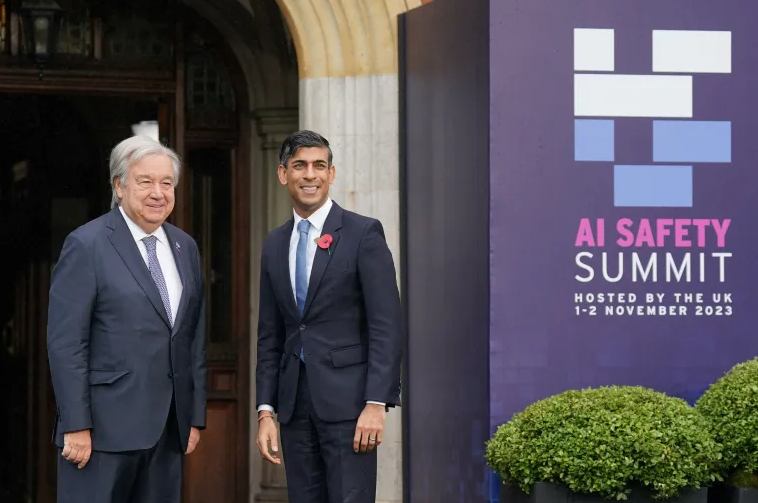

In November of last year, the United Kingdom hosted the first global summit on artificial intelligence safety, where world leaders and major technology companies gathered to discuss the safe and responsible development of the technology, as well as the risks it poses in both the short and long term.

According to the report, these risks range from the use of artificial intelligence to create malicious software for cyberattacks to a potential existential threat to humanity should humans lose control over it.

Commenting on the new international standard, BSI chief executive Susan Taylor Martin said that "artificial intelligence is a transformative technology, and trust is paramount if it is to be a force for good." She added that "publishing the first international standard for artificial intelligence management systems is an important step towards enabling organizations to manage the technology responsibly."